substack.com - A Lean Approach to AI Case to Withstand Existing Gaps in AI Adoption Strategies

Why AI Stalls at PoCs, how Leadership Reflexes and Vendor Hype Break Delivery, and what a Lean Approach Can Do Differently

AI is at the same fragile inflection point that software faced decades ago. The technology is accelerating, but most organisations are stuck in outdated delivery models. And the cost of failure is no longer just technical, but strategic.

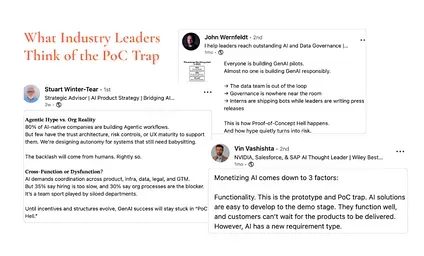

The PoC Problem: Busy, But Going Nowhere

One of the clearest signs of trouble? The Proof-of-Concept trap. Many AI teams are stuck in a loop of building demos that never scale. The work looks promising on slides, but it rarely sees the light of day in production. It’s not that the tech doesn’t work, but that no one thought beyond the showcase.

From Davos 2026: Leaders on why scaling AI still feels hard - and what to do about it | Source

Executives greenlight pilots to show movement. Teams build in isolation without a clear path to integration. And before learning from one initiative can compound, the organisation has moved on to the next AI idea. Value stalls, but activity continues, which makes it harder to call out the problem.

Venture capitalists are noticing the pattern too. In recent data infrastructure roundtables and essays, VCs are calling out the fatigue from endless PoCs with no repeatable value. They’re asking harder questions:

What actually went live? Did it move a metric? Is it being reused across the org?

A PoC without a path to production is now viewed less as innovation and more as a distraction.

This shift reflects a broader realisation: the AI bottleneck isn’t the model, but the missing foundations of data architecture, the lack of ownership, and the absence of a repeatable delivery approach. Without these foundations, every AI effort becomes a one-off, and the organisation ends up solving the same problems again and again.

Unsurprisingly, many firms’ AI adoption journey starts with technology and data. “AI has been a catalyst for companies to really look at their technology,” said Julie Sweet, Chair and Chief Executive Officer of Accenture, with many companies investing in data too. “The companies that have done this early, like Saudi Aramco – or McDonald’s, which created its data foundation very early – are surging ahead in how they’re using it.” (World Economic Forum Annual Meeting)

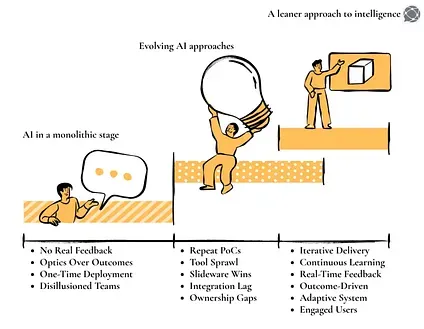

AI Might Still Be Stuck in the Monolith Phase

The symptoms of AI hype? A trail of expensive prototypes, disillusioned teams, and tech that no one uses. These aren’t edge cases. They’re becoming the industry standard. And once you notice the pattern, it’s everywhere.

The phases of AI adoption and how it can reach an ideal state | Source: Authors

The Pattern of Failure: Where AI Efforts Derail

The failures aren’t random. They follow patterns. Once you start looking closely, you’ll notice the same mistakes playing out across different industries, budgets, and use cases.

AI isn’t falling short because the technology is broken. It’s failing because the approach is. These are not edge cases but the mainstream symptoms of how AI is being delivered today.

The “AI Theatre” Phenomenon

Many organisations treat AI as a performance rather than a business capability. A chatbot launched to keep up with peers, a GenAI tool unveiled at a town hall, an AI dashboard built for the board, not the user.

These projects often have high visibility and low utility. They’re not designed to solve a real problem. They’re designed to show that AI is happening.

Consider a Fortune 500 bank that launches a customer service chatbot, not because of a well-defined service gap, but because everyone else in its market is doing the same.

The bot gets built fast, but poorly. It handles simple FAQs but fails on anything nuanced. Within six months, usage drops to under 5%. Customers bypass it. Support teams work around it. The whole thing fades away, and the effort spent on it fades with it.

The problem here isn’t the model. It’s the mindset: tech-first thinking. The organisation led with “We need AI” instead of asking, “What’s the real problem we’re trying to solve?” The result is an expensive, well-marketed tool with no practical fit.

The “Shiny Object” Syndrome

The pace of AI innovation is relentless and distracting. Every month brings new models, new APIs, and new capabilities. But in trying to chase all of them, organisations end up committing to none.

A manufacturing firm starts with computer vision for defect detection. Three months later, they pivot to generative AI for quality reports. Then it’s predictive maintenance. Each project is staffed, scoped, demoed, and then dropped.

The underlying issue? No seed use case to start from, no focus, and no follow-through. Without clear success criteria or production pathways, AI becomes an endless loop of lab experiments.

The “Big Bang” Fallacy

This one comes straight from legacy IT thinking: the belief that you can plan your way into a successful AI system. Take a large healthcare network that invests $5 million in a centralised diagnostic AI platform and plans its rollout across five hospitals over 18 months.

But by the time the system is ready, the workflows have changed, the data is stale, and the models are no longer clinically relevant.

AI doesn’t work like ERP.

You can’t blueprint it for 18 months and expect it to land cleanly. It needs iteration, real-time feedback, and course correction along the way.

Drowning in Tools, Starving for Standards

In the AI landscape, and especially in the GenAI space, every few weeks brings a new SDK, a “must-have” vector database, an observability tool that claims to solve hallucinations, or a framework that promises orchestration at scale. On paper, this looks like progress. In practice, it’s an invitation to paralysis.

Most teams are overwhelmed. They don’t know what they’ve adopted, what’s redundant, or what’s adding any real value. Instead of clarity, they’re buried in integration overhead, overlapping capabilities, and fragmented workflows. Internal documentation can’t keep up, technical debt piles up silently, and no one owns the sprawl. Engineers are busy wiring things together instead of solving business problems.

This isn’t just a tooling problem; it’s a symptom of a deeper one: the lack of a focused, value-driven approach to AI adoption.

Cultural Silos and Low Internal Readiness

Boston Consulting Group reports that 74% of organisations still struggle to derive value from AI, with only 26% showing clear, ongoing ROI (Source). BCG’s findings also highlight that scaling AI without accounting for data management, governance, and cultural alignment leads to widespread cost overruns and delays (Source). Tech investment without AI readiness is investment lost.


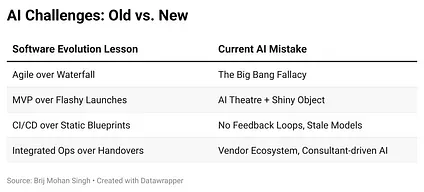

The Changing Landscape of AI Challenges

Many of today’s AI adoption challenges were far less common before. As technologies change, so do the challenges that come with them.

But why does this happen? Let’s dig into the reasons behind these challenges.

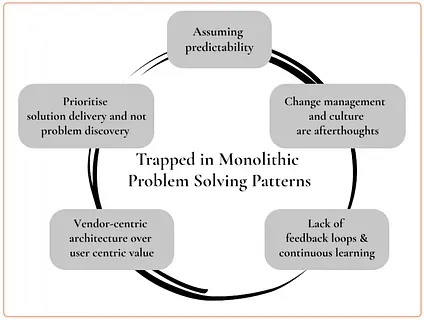

Why Are Leaders Getting AI Delivery Wrong? The Loop of Leadership Missteps

Most leadership teams aren’t blindly chasing AI hype but are actively trying to make sense of a fast-moving landscape while balancing pressure from markets, boards, and internal stakeholders.

The intention is rarely the problem. But even with the best intentions, familiar patterns from past tech transformations often get applied to AI without considering what makes it fundamentally different.

These are not failures of intelligence or effort. They’re the result of reflexes shaped over decades of navigating digital transformation. The challenge is that many of those reflexes, like planning for stability, prioritising ROI visibility, or scaling before validating, don’t translate cleanly into AI. And so, without realising it, even the smartest teams fall into familiar loops.

FOMO and Competitive Mimicry

The AI race is more psychological than technical. Boardrooms feel the pressure to act, not because the business is ready, but because everyone else seems to be doing something. This competitive mimicry drives decisions that are reactive, not strategic. It’s not about whether the AI solves a real business problem. It’s about being able to say “we’re doing AI too.”

That urgency overrides rigour. Leaders announce initiatives before the use case is clear, pilot solutions without defining the problem, and chase market optics instead of operational fit.

The “Magic Box” Expectation

There’s still a persistent belief that AI is a black box that can absorb messy problems and spit out optimised answers. This mindset deprioritises the operational scaffolding AI actually depends on: precise problem statements, clean and well-governed data flows, integration into real business workflows, and mechanisms for feedback and iteration. AI isn’t plug-and-play. It’s a system that needs to be engineered, embedded, and continuously refined.

Treat it like a black box, and it will behave like one: opaque, unpredictable, and disconnected from the outcomes it was supposed to drive.

Consultant-Driven AI Transformation

External consultants often show up with multi-year AI roadmaps, enterprise-wide blueprints, and transformation frameworks that promise scale before substance. These strategies tend to optimise for scope, not value. They emphasise deliverables over discoveries, and static plans over adaptive learning.

The result? Impressive decks, vague metrics, and a portfolio of projects no one’s really accountable for. Organisations get stuck managing the roadmap instead of building momentum around what’s working.

Media Bias Toward Success Stories

Most AI stories that make headlines are polished successes, not the messy failures that came before. This creates a survivorship bias in how AI is perceived. Leaders hear about AI transforming marketing, operations, and customer service, but not about the countless attempts that didn’t make it past the pilot phase.

This selective storytelling skews expectations. It makes failure feel like an outlier when, in reality, it’s often the default outcome of poorly scoped or poorly integrated efforts.

Obsessing Over Tech Without Understanding the Problem

In many engineering-led organisations, there’s a bias toward building sophisticated solutions, even when simpler fixes would work. Teams gravitate toward model development, parameter tuning, and infrastructure design without first validating whether the problem warrants AI at all.

This approach leads to over-engineering and under-delivering. It disconnects AI efforts from the users, use cases, and outcomes that matter. What gets optimised is the model, not the business impact.


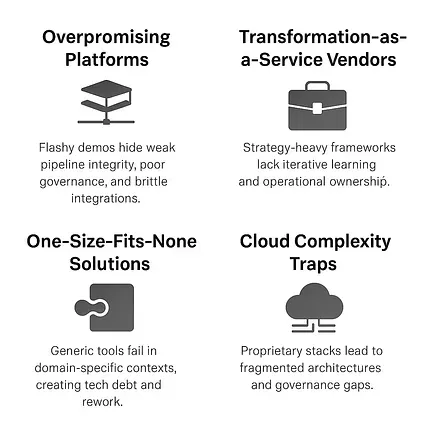

Why Even Good AI Gets Undone by the Wrong Partners

The Vendor Ecosystem Problem: Everyone’s Selling AI, Few Are Solving for It

Are internal missteps always what holds AI efforts back? Not really. More often than not, the surrounding ecosystem is adding fuel to the fire.

Platforms promise ready-to-use intelligence, consultants pitch AI-in-a-box roadmaps, and vendors offer generalised solutions to highly specific problems. The result is a market filled with AI that looks complete on paper but falls apart in practice.

Take the **IBM Watson for Oncology** case. IBM’s $62M Watson for Oncology aimed to revolutionise cancer care, but couldn’t move beyond one institution’s data. Why? No real-world feedback loops. No adaptability. No integration into clinical workflows. A static solution in a dynamic world.

A classic case of “build it, train it, ship it,” with no lean mechanism for validating or evolving the product in motion.

The vendor ecosystem problem in AI adoption | Source: Authors

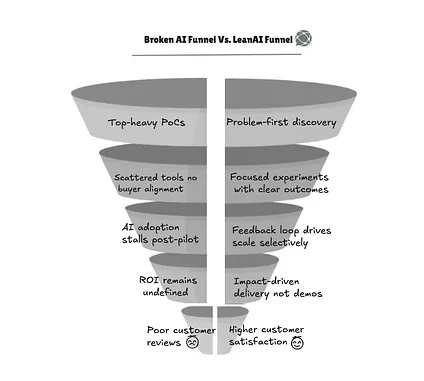

The Cost of Broken AI We Can No Longer Afford

The damage from subpar or failed AI initiatives runs deeper than budget overruns or missed KPIs. Over time, these initiatives erode culture, credibility, and the organisation’s ability to try again.

These are not isolated project risks but systemic outcomes of treating AI as a tech layer rather than a strategic capability. And over time, they destroy the foundation needed to deliver anything meaningful with AI.

The impact of the current AI adoption approaches vs the impact of a leaner approach to AI | Source: Authors

So, what does broken AI actually cost?

Opportunity Cost

Every resource tied up in a poorly scoped project could have gone into something else: a proven analytics enhancement, a core process improvement, or even a simpler automation that would’ve moved the needle. Time and talent are finite. Burning them on initiatives that have no real path to value doesn’t just slow down AI; it slows down the business.

The most dangerous part is that these opportunity costs are rarely tracked. On paper, the project “explored a use case.” In reality, it displaced efforts that could’ve delivered impact.

Organisational Fatigue

When teams spend months building something that never launches, or gets sunsetted after one demo, the result is a slow, widespread disengagement. Data scientists feel like model developers for PowerPoint. Product teams stop prioritising AI integration because “nothing ever ships.” Business stakeholders grow sceptical and default to manual workarounds.

Data Quality Degradation

AI surfaces data issues fast, but without a solid foundation, organisations often work around these problems instead of fixing them. Teams patch missing values, retrain on biased data, or overfit models to noise. This doesn’t just hurt one project, but creates a technical debt spiral where data issues compound over time.

Worse, these shortcuts rarely get documented. Future teams inherit flawed pipelines without context, making future models less reliable and harder to audit. The end result is data that looks usable but can’t be trusted when it matters most.

Talent Exodus

Good AI practitioners know the difference between experimentation and futility. When they’re asked to ship models without context, build solutions with vague objectives, or defend performance metrics no one understands, they eventually walk away. No one wants to be the engineer behind a system that’s never used.

And once word spreads that the organisation treats AI like a vanity project, it becomes harder to hire and retain the right talent. Smart people don’t stick around to build things that never go live.

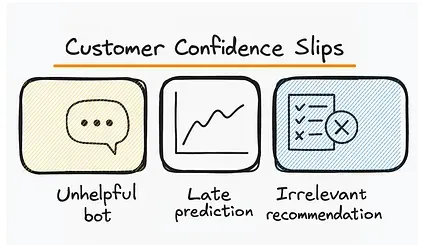

Customer Trust Erosion

When AI touches customer-facing experiences, the stakes are even higher. A chatbot that can’t solve real problems, a recommendation engine that gets it wrong, a predictive alert that triggers too late: all of these chip away at user trust. In some industries, that erosion isn’t just reputational, it’s regulatory.

Once customers learn to work around AI, they stop trusting anything that looks like it. That perception is hard to undo.

Can Older Frameworks Address Today’s AI Challenges?

Actually, no. As we’ve walked through these problems and their changing nature, the conclusion is clear: stabilising AI adoption calls for a new approach.

Why older frameworks cannot address today’s AI challenges: Limitations of the earlier problem-solving technologies/approaches | Source: Authors

Going All In With AI: Not the Best Call Now

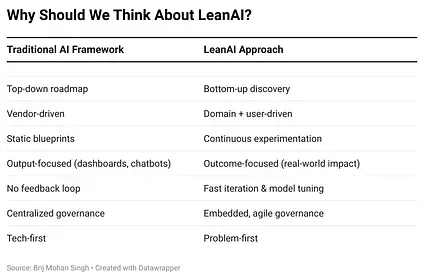

What the AI world needs now is the equivalent of Agile and DevOps: a mindset built for adaptive delivery. A ‘Lean’ approach.

Software development didn’t always look the way it does today. We forget how painful it once was: rigid roadmaps, late-stage testing, siloed teams, and massive releases that broke more than they built.

It took years and a lot of broken systems to move from monoliths to microservices, from waterfall to agile.

We need the same kind of shift that rewired the software world, one that treats AI as a capability built in smaller loops, seeks real-world feedback early, and prioritises user value over purely technical success. Just as software came to be treated, AI should be treated not as a project, but as a product.

A Marketing Instance

We don’t need bigger AI; we need smarter delivery and more impact. For instance, few CMOs would greenlight a $300K ad campaign without A/B testing messaging, creative, and audience targeting. You don’t flood every channel on Day 1; you test a few. A Facebook ad variant here, a landing page tweak there. You measure and iterate. You scale what converts.

So why should we treat AI any differently?

AI also deserves that same level of go-to-market discipline.

But here’s what often happens instead:

Companies load up on AI infrastructure, onboard expensive talent, and kick off grand initiatives, without first validating whether the business problem is even worth solving with AI. That’s like launching a global rebrand without customer insight. It burns resources fast and quietly underdelivers.

Let’s borrow from the smarter playbook.

Start with the Customer Problem, Not the Cool Tool

In go-to-market (GTM) planning, you don’t pick a channel because it’s trendy. You start with the goal (boost lead quality, improve retention, reduce churn) and then find the tactic that fits. AI should work the same way.

Before spinning up a model, zero in on the actual friction: Are sales cycles too long? Is churn high in a specific segment? Frame the challenge in human terms. Then evaluate whether AI is even the right lever. Sometimes, it isn’t.

Test Quickly, Not Perfectly

Marketing teams don’t build a campaign engine in one go. They run sprints. Test email headlines. Launch a microsite. The goal: learn before scaling.

AI teams should adopt the same tempo. Set time-boxed experiments with measurable impact goals. Think: “Can this model reduce ticket resolution time by 20%?” and not “Can we get it to a 94% F1 score?”
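To make the contrast concrete, here is a minimal Python sketch of such a time-boxed experiment, assuming the pilot is judged on mean ticket resolution time against a 20% reduction target. The `PilotExperiment` class, its fields, and the sample numbers are hypothetical illustrations, not a prescribed LeanAI tool; the point is that the success test is written in business terms before the experiment starts.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class PilotExperiment:
    """A time-boxed pilot judged on a business outcome, not a model metric.

    All names and thresholds here are illustrative assumptions.
    """
    name: str
    target_reduction: float  # 0.20 means "cut resolution time by 20%"
    time_box_days: int       # hard stop for the experiment

    def relative_reduction(self, baseline_hours: list[float], pilot_hours: list[float]) -> float:
        """Fractional drop in mean ticket resolution time versus the baseline."""
        base = mean(baseline_hours)
        return (base - mean(pilot_hours)) / base

    def verdict(self, baseline_hours: list[float], pilot_hours: list[float], days_elapsed: int) -> str:
        """Return 'goal met', 'time box expired', or 'keep testing'."""
        if self.relative_reduction(baseline_hours, pilot_hours) >= self.target_reduction:
            return "goal met"
        if days_elapsed >= self.time_box_days:
            return "time box expired"
        return "keep testing"


# Made-up numbers: mean resolution time falls from 10h to 7.5h, a 25% cut.
pilot = PilotExperiment(name="ticket-triage-assistant", target_reduction=0.20, time_box_days=30)
print(pilot.verdict([10.0, 12.0, 8.0], [7.0, 8.0, 7.5], days_elapsed=14))  # prints: goal met
```

Notice that nothing in the verdict refers to F1 or accuracy; a model could score well on those and still fail this test.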

Track Business Value, Not Just Model Metrics

Just as a CTR doesn’t tell you customer lifetime value, a precision score won’t show how AI impacts revenue. What matters is business lift: higher NPS, faster onboarding, lower acquisition cost.

So swap your dashboards. Replace confusion matrices with outcomes. AI that boosts conversion by 10% is far more valuable than a model that’s 98% accurate on a synthetic dataset.

Learn Fast, Then Let Go

Good marketers kill bad campaigns. Fast. If something isn’t converting, it doesn’t matter how pretty it looks. That same muscle needs to exist in AI efforts.

If a pilot doesn’t show traction in real conditions, don’t waste cycles trying to force it. Let it go. The win isn’t in saving the work, it’s in saving the business from waste.

Invest Where the Signal is Strong

Once a marketing test proves repeatable impact, say, a campaign consistently boosts demos by 25%, you don’t hesitate. You scale. More spend, more channels, more support. It’s not a gamble.

Treat AI the same. When a solution drives results in one region or one workflow, double down selectively.

Not everything deserves to scale, only the winners. We call this the LeanAI Approach.
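The kill-or-scale discipline described in the last few sections can also be written down as an explicit rule. The sketch below is a hypothetical Python example, assuming each pilot run reports a relative lift in one business metric (0.25 meaning +25% demos). The `scale_or_let_go` function, the `min_lift` and `min_runs` thresholds, and the "half the target" cut-off for letting go are illustrative assumptions to be tuned per use case, not part of any published LeanAI specification.

```python
from statistics import mean


def scale_or_let_go(lifts: list[float], min_lift: float = 0.10, min_runs: int = 3) -> str:
    """Decide what to do with a pilot from its per-run business lift.

    lifts: relative lift in a business metric per test run (0.25 == +25% demos).
    min_lift and min_runs are hypothetical thresholds; set them per use case.
    """
    if len(lifts) < min_runs:
        return "keep testing"            # not enough evidence yet
    recent = lifts[-min_runs:]
    if all(lift >= min_lift for lift in recent):
        return "scale"                   # repeatable impact: double down
    if mean(recent) < min_lift / 2:
        return "let go"                  # no traction in real conditions
    return "keep testing"                # mixed signal: another time-boxed run


print(scale_or_let_go([0.26, 0.25, 0.27], min_lift=0.25))  # prints: scale
print(scale_or_let_go([0.01, -0.02, 0.0]))                  # prints: let go
```

Writing the rule down before the pilot starts makes it harder to keep a project alive on sunk cost alone; the mixed-signal branch is where human judgement and a hard time box should take over.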

Thinking Lean


LeanAI isn’t a tech stack. It’s not a new framework to evaluate vendors. And it’s definitely not about hiring more ML engineers. It’s a way of thinking. It doesn’t downplay the power of advanced models, but redirects focus toward continuous delivery, feedback-oriented learning, and outcome-driven iteration. It forces teams to ask uncomfortable but essential questions early:

What signal are we optimising for?

What behaviour needs to change?

What does success look like in the hands of a user, not just in a benchmark?

LeanAI reframes AI work as a flow, not a feature. A repeatable capability over a one-time win. It borrows from the principles that made DevOps and Agile work in the software world: ship smaller, learn faster, validate earlier, and adapt continuously.

Only now, the stakes are higher, and the systems are probabilistic.

MD101 Support ☎️

If you have any queries about the piece, feel free to connect with the author(s). You can also reach the MD101 team directly at community@moderndata101.com 🧡

Author Connect


Find me on LinkedIn 💬

Find me on LinkedIn 💬

From MD101 team 🧡

🧡 Join 1500+ on The State of Data Products

Introducing The Catalyst, a special edition of The State of Data Products that compiles insights around the ups and downs in the data and AI arena that fast-tracked change, innovation, and impact.

📘 The first release of The Catalyst is our annual wrap-up, compiling the most interesting moments of 2025 in AI & data products, with expert insights from across the industry.

Some Focal Topics

- The gap in AI readiness for enterprises

- The shift towards context engineering

- Addressing governance debt with a focus on lineage gaps

- Revisiting fundamentals for better alignment between business vision and AI