saastr.com - Our 20+ AI Agents and Their Moats: Real But Weak

We had a moment last month that should worry every AI agent startup. Or anyone building AI agents.

We were deploying Agentforce – our newest sales agent – to follow up with 1,000+ leads our team had ghosted. We needed to train it on our messaging, our tone, our process. The kind of thing that generally takes weeks or even months, with the help of a Forward Deployed Engineer.

Instead, we just copied the prompts from another outbound AI tool, changed a few details, and it just … worked.

Not “kind of worked.” Not “worked after heavy modification.” Just… worked. And very well.

Same quality. Same personalization. Same results (72% open rate, if you’re counting).

If you’re building or investing in AI agents, this should make you uncomfortable.

AI Agent Moats May Be Weaker Than Traditional SaaS. They Are For Us

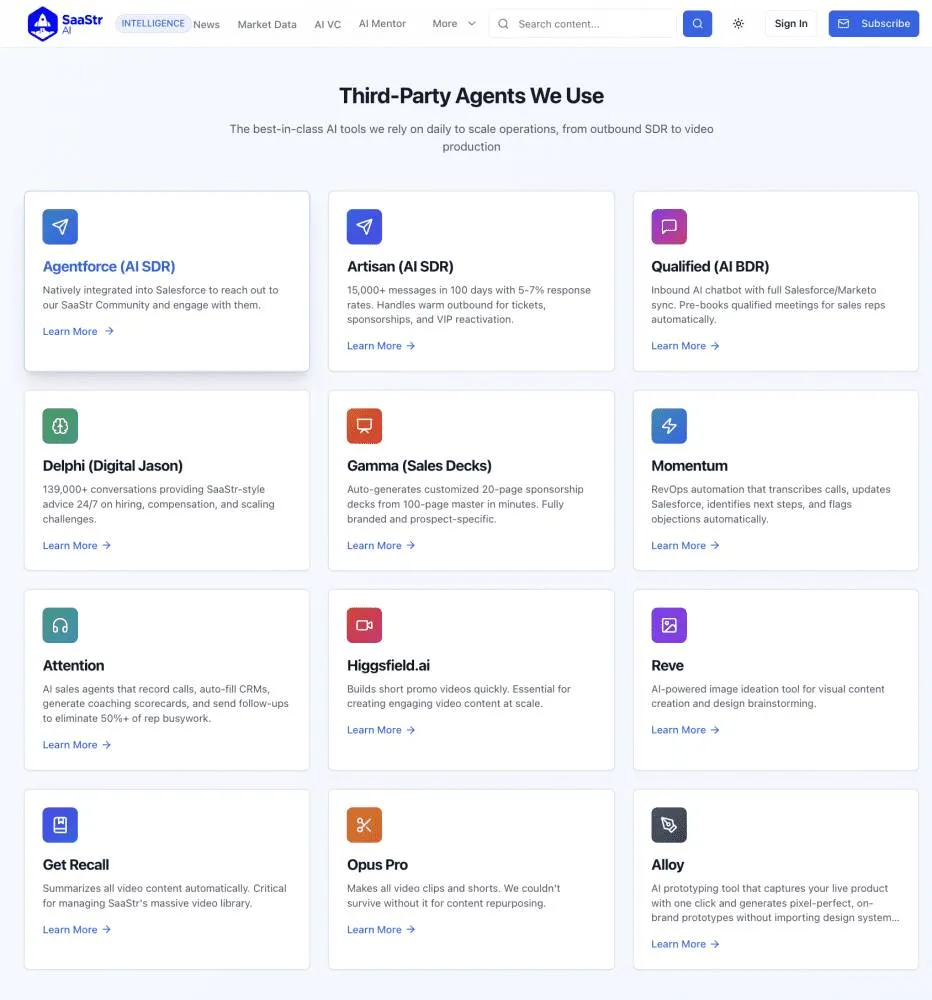

We now run 20+ AI agents across our entire GTM stack. Different vendors, different use cases, different price points. Some we coded ourselves. Some are specialized platforms. Some are from incumbents like Salesforce.

And here’s what six months of deploying agents has taught me: the differentiation between AI agents is narrower than you might think.

Yes, there are differences:

- Some have better UX

- Some integrate more natively with existing tools

- Some have more specialized features

- Some have better deliverability infrastructure

But the core intelligence? The actual AI work? If you train them well, the leading agents all perform well. They mostly run on the same handful of LLMs. And you can largely reuse the same prompts and the same training across similar AI agents.

The Copy-Paste Moment

Let me walk you through what actually happened with Agentforce.

One of our outbound AI agents (call it Agent A) had been running for months. We’d spent considerable time training it:

- Tone of voice for different audiences

- Proof points for different products

- When to be aggressive vs. consultative

- How to handle multi-threaded questions

- Edge cases and failure modes

All of it documented in detailed prompt instructions. Hundreds of words of carefully tuned guidance.

When Agentforce came along, we opened up Agent A’s prompt builder. Selected all. Copied. Opened Agentforce’s prompt builder. Pasted. Changed “outbound cold prospecting” to “following up with warm leads who raised their hand.” Changed a few product-specific details.

Hit save.

It worked immediately.

Not just “worked” as in “didn’t crash.” Worked as in: generated emails that were essentially indistinguishable in quality from Agent A. Understood context. Personalized appropriately. Maintained our voice.

Why This Matters for AI Agent Companies

If I can copy-paste prompts between completely different AI agent platforms – from a startup to Salesforce Agentforce – and get comparable results, what’s actually defensible?

The answer is uncomfortable: not much.

Here’s what I initially thought would be moats:

“Proprietary training data” – Nope. Every agent can ingest our Salesforce data, our website, our past emails. The underlying LLMs (Claude, GPT-4, etc.) are so good that the incremental value of “proprietary training” is minimal.

“Custom models” – Maybe for some use cases, but for GTM? The base models are already excellent at sales and marketing copy. Fine-tuning helps marginally, and well-crafted, copy-pasted prompts get you almost as far.

“Unique workflows” – This matters more, but it’s replicable. Once I understood how to structure campaigns in one tool, I could recreate similar workflows in others.

“Better AI” – Everyone’s using the same underlying LLMs. The wrapper matters less than people think.

What actually creates defensibility:

- Network effects – If your agent gets smarter from everyone’s data, not just mine

- Integration depth – Native Salesforce integration is genuinely better than bolted-on

- Specialized infrastructure – Deliverability, compliance, domain management

- Workflow lock-in – Once I have 20 campaigns running, migration cost is real

- Speed of innovation – Shipping features faster than prompts can replicate them

Notice what’s missing from that list? The actual AI quality.

The Universal Concepts Across Agents

After deploying 20+ agents, here’s what somewhat surprised us: the same concepts work everywhere.

Whether we’re training an outbound agent, an inbound agent, a support agent, or a marketing agent, I’m doing roughly the same things:

- Define the goal clearly – “Book meetings” vs “Answer questions” vs “Generate leads”

- Provide context about our business – Ingest docs, websites, past conversations

- Set tone and voice – Professional but friendly, data-driven, etc.

- Define boundaries – When to escalate to humans, what not to say

- Give examples – Good emails, bad emails, edge cases

- Iterate based on results – Monitor, refine, repeat

The UI looks different. The terminology varies (some call it “coaching,” others “instructions,” others “system prompts”). But it’s fundamentally the same process.

Which means once you learn one agent deeply, you can deploy others frighteningly fast.
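As a rough illustration of that shared process, here is a minimal Python sketch that captures the prompt-side concepts (goal, context, tone, boundaries, examples) in one vendor-neutral structure and flattens it into plain text you could paste into whichever field a given platform calls “coaching,” “instructions,” or “system prompt.” The `PromptSpec` class, its field names, and the section labels are hypothetical, not any vendor’s API. The last concept, iterating on results, happens outside the prompt and is not modeled here.

```python
from dataclasses import dataclass, field


@dataclass
class PromptSpec:
    """Hypothetical vendor-neutral container for the concepts listed above."""
    goal: str                                            # e.g. "Book meetings"
    context: list[str] = field(default_factory=list)     # business facts, docs, past conversations
    tone: str = ""                                       # voice guidance
    boundaries: list[str] = field(default_factory=list)  # escalation rules, things not to say
    examples: list[str] = field(default_factory=list)    # good/bad emails, edge cases

    def render(self) -> str:
        """Flatten the spec into one plain-text block; empty sections are skipped."""
        sections = [f"GOAL:\n{self.goal}"]
        if self.context:
            sections.append("CONTEXT:\n" + "\n".join(f"- {c}" for c in self.context))
        if self.tone:
            sections.append(f"TONE:\n{self.tone}")
        if self.boundaries:
            sections.append("BOUNDARIES:\n" + "\n".join(f"- {b}" for b in self.boundaries))
        if self.examples:
            sections.append("EXAMPLES:\n" + "\n\n".join(self.examples))
        return "\n\n".join(sections)


# All strings below are placeholders, not real training material.
spec = PromptSpec(
    goal="Book meetings with warm leads who raised their hand.",
    context=["<one-paragraph company description>", "<product proof points>"],
    tone="Professional but friendly, data-driven.",
    boundaries=["Escalate pricing negotiations to a human.", "Never promise discounts."],
    examples=["GOOD: <short, specific email that references the lead's last interaction>"],
)
print(spec.render())
```

Keeping the spec as data rather than as text typed into one vendor’s UI is one way to make the copy-paste move repeatable: after any change, re-rendering produces identical instructions for every platform you run.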

What This Means for Startups

If you’re building an AI agent company, this should be sobering.

Your AI isn’t your moat. Your AI is table stakes.

The companies that will win aren’t those with the “best AI” (whatever that means). They’ll be the ones that:

- Go vertical – Don’t build “an AI sales agent.” Build “the AI SDR for enterprise SaaS selling to IT buyers.” Depth beats breadth.

- Build network effects – Can your agent get smarter from aggregate customer data? If not, you’re just a wrapper.

- Own infrastructure – Email deliverability, domain reputation, compliance – these are real moats. They’re boring, but they’re defensible.

- Integrate deeply – Native integrations with Salesforce, HubSpot, etc. are genuinely better than generic OAuth-based connectors. Fight for them.

- Move insanely fast – If I can copy your prompts, you need to ship features faster than I can replicate them manually.

- Create lock-in through data – Once I have six months of campaign data in your platform, migration costs are real. But you have to get me to six months first.

The “we have better AI” story doesn’t cut it anymore. Everyone has good AI. The question is what else you have.

What This Means for Buyers

If you’re buying AI agents (hi, that’s me), this is actually great news.

You’re not as locked in as you might think. If a vendor isn’t performing, you can likely replicate their functionality elsewhere with relatively low switching costs. Your prompts, your training, your learnings – they’re portable.

Do bake-offs. But not 10 at once (seriously, don’t do that). Pick two vendors. Give them the same prompts. See who performs better. The delta might be smaller than you think.

Negotiate hard. These companies are raising at wild valuations based on “proprietary AI” that’s less proprietary than they claim. You have more leverage than you think.

Focus on non-AI differentiation. Better deliverability? Native integrations? Superior onboarding? Those matter more than “we use GPT-5 instead of Claude.”

Expect commoditization, or at least rough parity. Prices may come down. Features will converge. Plan accordingly.
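For the bake-off advice above, one standard way to check whether the gap between two vendors is real or just noise is a two-proportion z-test on a shared metric such as open, reply, or meeting-booked rate. The sketch below assumes both vendors ran the same prompts against comparable, randomly split halves of the same lead list. The function name and the counts in the example are hypothetical, not our results.

```python
import math


def two_proportion_z_test(hits_a: int, n_a: int, hits_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided two-proportion z-test.

    hits_* are successes (e.g. opens or replies), n_* are leads contacted.
    Returns (z, p_value).
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("each vendor needs at least one lead")
    rate_a, rate_b = hits_a / n_a, hits_b / n_b
    # Pooled rate under the null hypothesis that both vendors perform the same.
    pooled = (hits_a + hits_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("both rates are 0% or 100%; the test is undefined")
    z = (rate_a - rate_b) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical bake-off: same prompts, 500 leads per vendor.
z, p = two_proportion_z_test(hits_a=360, n_a=500, hits_b=345, n_b=500)
print(f"z = {z:.2f}, p = {p:.3f}")
```

With those made-up counts (72% vs. 69% on 500 leads each), the p-value lands around 0.3, so a three-point gap alone would not be good evidence that either vendor is better. Smaller samples make the problem worse.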

The Exception: Truly Specialized Agents

There’s one major caveat to everything I just said: highly specialized agents with unique workflows.

Our inbound agent (Qualified) does things that would be extremely hard to replicate elsewhere:

- Real-time website visitor tracking

- Intent signal aggregation

- Complex routing logic

- Integration with our calendar system

- Pre-meeting context aggregation

Could we theoretically copy-paste prompts and rebuild this? Maybe. But the workflow and infrastructure around it? That’s genuinely differentiated.

Same with specialized agents that have built unique datasets or trained custom models for narrow use cases. A medical coding agent or legal contract review agent with years of specialized training? That’s harder to replicate.

But generic GTM agents? Sales, marketing, support? The moats are weak.

What We’re Doing About It

Knowing this, here’s how we’re thinking about our agent stack:

Go deep on specialized tools. We use different platforms for outbound, inbound, and warm follow-up because each does something uniquely well beyond just the AI.

Accept some redundancy. Yes, we could consolidate to one platform. But the switching costs are low enough that we optimize for best-in-class per use case.

Keep prompts portable. We maintain a library of our best prompts, tone guides, and training materials. They work across platforms with minimal modification.

Evaluate constantly. Every quarter, we ask: is this agent still the best option? The bar for switching is lower than it used to be.

Build relationships with founders. When moats are weak, the quality of the team building the product matters more. We want partners who ship fast and listen.
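The “keep prompts portable” practice above can be sketched as a small template library kept outside any vendor’s prompt builder. This minimal Python version, built on the standard library’s `string.Template`, mirrors the Agentforce copy-paste: one base prompt, with only the motion swapped from “outbound cold prospecting” to “following up with warm leads who raised their hand.” The library layout, placeholder names, and `build_prompt` helper are hypothetical illustrations, not how any particular platform stores prompts.

```python
from string import Template

# Hypothetical prompt library: one base template per use case, kept in
# version control rather than inside a single vendor's prompt builder.
PROMPT_LIBRARY = {
    "outbound_email": Template(
        "You are writing on behalf of $company. Your task is $motion.\n"
        "Tone: $tone\n"
        "Escalate to a human when: $escalation"
    ),
}


def build_prompt(use_case: str, **details: str) -> str:
    """Fill a library template with deployment-specific details."""
    # substitute() raises KeyError if any placeholder is left unfilled.
    return PROMPT_LIBRARY[use_case].substitute(**details)


shared = {
    "company": "Example Co",  # placeholder company name
    "tone": "professional but friendly, data-driven",
    "escalation": "the lead asks about pricing or contracts",
}

cold = build_prompt("outbound_email", motion="outbound cold prospecting", **shared)
warm = build_prompt(
    "outbound_email",
    motion="following up with warm leads who raised their hand",
    **shared,
)
print(warm)
```

Using `substitute` instead of `safe_substitute` is deliberate: a missing detail fails loudly rather than leaving a literal `$placeholder` in the text that gets pasted into a new platform.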

AI Agents Are Becoming Infrastructure

Here’s what I think is really happening: AI agents are becoming infrastructure.

Like email service providers. Or payment processors. Or cloud hosting.

You still need them. They’re insanely valuable. But the differentiation is increasingly about reliability, integration, and service – not about the core technology.

The “AI” part is commoditizing, or at least converging rapidly toward parity on core functionality. What’s not commoditizing:

- Distribution

- Integration

- Domain expertise

- Customer success

- Speed of innovation

If you’re betting on AI agent startups (as investors or founders), bet on those dimensions. Not on “we have better AI.”

Prompts Are Portable

We just deployed an enterprise AI agent from Salesforce using copy-pasted prompts from a two-year-old startup. Both work great. Both have pros and cons. Neither has a strong AI moat on its own.

That’s the reality of 2026.

The winners won’t be determined by who has the best underlying AI. They’ll be determined by who builds the best everything else around that AI.

For buyers, this is liberating. You’re not locked in. You can switch. You can negotiate.

For builders, this is terrifying. Your AI moat is weak. What else do you have?

The Cambrian explosion of AI agents is real. The competition is fierce. And the moats are shallower than anyone wants to admit.

Choose your vendors accordingly.

Running 20+ AI agents across GTM? Yeah, it’s a lot. We’ll be sharing more detailed results and learnings at SaaStr AI London (December 1-2) and in future posts. Follow along at saastr.ai/agents.