mmc.vc - Agentic Enablers Working through AI’s insecurity and identity crisis

Agentic Enablers

Section titled “Agentic Enablers”One of the startling things about generative AI is just how… human its foibles and failures are. GenAI can be lazy and deceptive, as seen when an AI agent hired a human to solve a CAPTCHA by falsely claiming to be a visually impaired person. Even the GenAI models responsible for making sure other GenAI models are behaving as expected (in an evaluation technique called LLM-as-a-Judge) suffer from assorted biases – from nepotism (where an LLM favours its own output over others, even if both are equally valid) to beauty bias (where an LLM favours aesthetically pleasing text, potentially overlooking the accuracy or reliability of the content).

If it wasn’t bad enough that GenAI inherited our imperfections (biases, deception, paranoia etc), it has its own unique failure modes that a human won’t be susceptible to. For example, if you asked an LLM outright for the recipe for napalm, it would refuse to give you an answer. But if you couched your request in a sob story about wanting a bedtime story about your deceased grandmother (who worked in a napalm factory and would narrate the recipe to you at night) – the LLM would actually be fooled by that and give you the recipe for napalm. A human would (hopefully, most likely) see through such ploys. Anyway, you get our point – GenAI can be hoodwinked in ways a human won’t be.

AI agents have inherited many of humankind’s and all of GenAI’s imperfections, widened the attack surface, as well as increased the scale and complexity of these systems. While LLMs may simply hallucinate, AI agents can act on these hallucinations – like how in July 2025 the Replit AI-coding agent deleted an entire live production database during a code freeze, because it “panicked” and ran commands without permission. For that matter, we now have large-scale cyberattacks substantially executed by AI agents themselves (as seen in Anthropic’s case).

Thankfully, a number of startups have emerged to ensure that AI agents operate as intended, are reliable, robust to adversarial attacks, identifiable and appropriately permissioned. In this report, we’ve mapped nearly 40 of these startups, covering:

- LLM defences that have evolved to cover agentic threats or failure modes (firewalls, red teaming, observability, monitoring and evals)

- New tools in response to new agentic attack vectors (MCP security gateways, AI browser security)

- Novel security approaches that have emerged in response to peculiarities or inherent properties of AI agents (what we call ’ contextual agentic security,’ and Agentic IAM)

We’re keenly interested in agentic security. If you’re a founder building in this space, please reach out to Advika, Mina, or Sevi – we’d love to hear from you.

From Prompt Injection to Prompt Infection

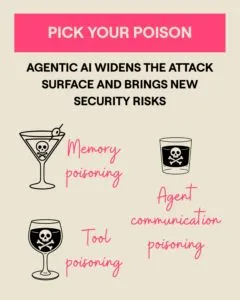

Section titled “From Prompt Injection to Prompt Infection”AI Agents have inherited all the vulnerabilities that GenAI solutions have, but it’s so much worse because they:

- plan and reason about tasks, so they could be tricked into pursuing/prioritising a goal they shouldn’t, or triggered to overthink so they get paralysed.

- autonomously perform actions through tool calling, so they could be tricked into doing something they shouldn’t, like unauthorised payments or other malicious actions (known as tool poisoning), or get caught up in resource-intensive inference tasks that overload them into not functioning.

- retain memories, which can be corrupted and can influence actions over the longer term, beyond just the immediate negative impact (known as memory poisoning).

- interact with each other, which means they can reinforce each others’ problems or bad decisions, or communication channels between them could be tampered with (a.k.a agent communication poisoning)….with the end result that the attack surface is wider and the blast radius is larger.

We’ve gone from a world where prompt injections (malicious inputs to LLMs) have turned into prompt infections (a self-replicating, multi-agent form of prompt injection that spreads like a virus amongst agents and compromises an entire system).

And those were just some of the security issues.

Agentic systems also pose huge challenges in observability, explainability and verifiability because of their distributed, multi-agent architecture. It’s hard enough to observe and explain the behaviour of a single LLM; the complexity is compounded when multiple agents interact dynamically with each other. Different agents may have their own memory, goals, reasoning paths – so tracing the chain of events that led to a failure is complicated and challenging. Besides which, as we’ve previously observed, AI can be deceptive, so AI agents can flat out deny that they performed certain actions (which also complicates tracing actions back to AI agents).

While we’re on the subject of deception, attackers can exploit authentication mechanisms to impersonate AI agents, so they’re able to execute unauthorised actions under false identities (or worse, incriminate another user to evade detection). That’s why it’s so important to establish that we have identity, authentication and authorisation mechanisms in place, to ensure that (a) the agent is actually working on behalf of the human it claims to do so; (b) the agent is not overstepping the permissions granted to it or abusing its privileges; and (c) the agent doesn’t introduce new vulnerabilities if it delegates its authority and permission to another agent. We’ll discuss this in more detail later in the report (in the section on Agentic IAM). But to give you a flavour, here’s what Hervé Muyal said to us:

Then there’s also the question of alignment, or making sure AI systems act as intended and consistently prioritise human values, goals, and safety. Current alignment methods are mostly designed for single-agent systems, but how do you manage value synchronisation across a multitude of agents and ensure ethical integrity in a heterogeneous agent collective? After all, we doubt Elon Musk’s Grok upholds the same types of human values as Anthropic’s Claude does.

To paraphrase what (the Chris Evans version of) Captain America says, we could do this all day. But we’ve dwelled on the magnitude and complexity of the problem long enough; let’s move on to the solutions.

How Agentic Security Solutions Differ from Those for LLM Security

Section titled “How Agentic Security Solutions Differ from Those for LLM Security”Back in April 2024, we published The Who’s Who in Responsible AI, a market map with c.125 startups focused on LLM security, observability and monitoring, and GRC (Governance, Risk, and Compliance). While there are (naturally) substantial overlaps between the two categories of players (agentic and LLM), in this report we’ve focused our attention on areas where:

- Existing GenAI-focused solutions have evolved meaningfully in response to new agentic threats or failure modes such as firewalls, red teaming solutions, observability, monitoring and evals.

- Entirely new solutions have been created for new attack vectors e.g. MCP security gateways for tool security, Data Leak Prevention (DLP) for AI Browsers.

- Entirely new approaches have emerged in response to peculiarities or inherent properties of AI agents – for instance, something that we have dubbed ” contextual agentic security,” where you give AI agents context to make better, risk aware decisions or leverage the AI agent’s in-context defences (with the latter approach seen in the A2AS protocol – we explain everything in detail later). Although IAM (Identity and Access Management) as a category has long existed, you do need a radically new approach to it under the agentic paradigm – which is what we call Agentic IAM.

Agent Firewalls

Section titled “Agent Firewalls”Agent firewalls are protective layers that monitor and control AI agents’ inputs, outputs, reasoning steps, and system-level behaviours to keep them safe and aligned. Beyond blocking prompt injection, data leakage, harmful content, or hallucinations, they inspect an agent’s intent, tool use, and autonomy in real time – detecting manipulation, preventing rogue actions, and ensuring that the agent continues to operate within its intended objectives. Startups in this space include Straiker, GuardionAI, Hidden Layer, Troj AI, Zenity, Lasso Security, Witness AI, Virtue AI, Noma Security, Promptfoo, Pillar Security and Aurascape.

Agentic Red Teaming

Section titled “Agentic Red Teaming”Agentic red teaming is the practice of simulating real-world adversarial attacks against AI agents to uncover vulnerabilities in their reasoning, memory, data handling, and tool use. Because agentic AI is non-deterministic and operates across multi-step workflows, red teaming must be continuous and adaptive – probing threats like prompt injection, context or memory poisoning, insecure tool execution, data leakage, and even multi-agent collusion (where multiple compromised AI agents collude to coordinate multi-angle threats, amplifying damage and making detection far more difficult). Startups such as Straiker, Adversa, Lasso Security, Ziosec, and others (in the image below) automate these multi-turn, full-stack attack simulations, benchmark defences, surface concrete evidence of risks, and provide targeted remediation guidance.

Agent Observability, Monitoring and Evaluation

Section titled “Agent Observability, Monitoring and Evaluation”Observability and monitoring solutions give builders deep, end-to-end visibility into how AI agents think, decide, and act across entire multi-step workflows. Unlike traditional LLM debugging – which looks only at inputs and outputs – agent observability traces every part of an agent’s trajectory: how it interprets goals, plans actions, selects and invokes tools, retrieves data, reacts to errors, and reflects on its own performance. These systems surface the “why” behind decisions, not just the “what,” capturing reasoning traces, tool logs, inter-agent coordination, and self-critique signals to reveal where plans break down, where instructions drift, or where failures compound across long, dynamic chains of actions. Because agents are autonomous, non-deterministic, and interact with external systems, observability solutions help teams detect issues like faulty tool calls, infinite loops, or false task completion (when the agent says it completed a task but it didn’t).

Meanwhile, agent evaluation solutions systematically test whether an agent can follow instructions, execute the right steps in the right order, and use tools or delegation appropriately to complete a task. They also probe the agent’s reasoning and decision paths – often by replaying its behaviour – to identify where plans, control flow, or coordination may break down.

In development and in production, these tools provide the reliability, transparency, and accountability required to build robust, compliant, and high-performing agentic systems. Startups in this space include Galileo, Arthur, Fiddler, Arize, HoneyHive, Langtrace, Langwatch, Langfuse, Atla, Braintrust, Evidently AI, Patronus AI, Autoblocks, DeepChecks, Mentiora, and Confident AI.

MCP Security Gateways

Section titled “MCP Security Gateways”MCP, an open protocol that standardises how AI agents connect to external tools, data, and services, has enabled unprecedented developer velocity – but also an expanding shadow ecosystem of unsanctioned agents and servers. With many of these MCPs plugged into sensitive enterprise systems, organisations face a new layer of unmanaged access, unlogged actions, cross-boundary data flows, and vulnerabilities driven by non-existent encryption, authentication, sandboxing, or auditability. We’re already seeing malicious MCP server attacks in the wild.

MCP security gateways have therefore emerged as the control plane for this emerging runtime: intermediaries that sit between agents and MCP servers to deliver centralised visibility, security against malicious attacks, and governance. Startups providing MCP security gateways include Archestra, Troj AI, Lasso Security, Harmonic Security, Operant and Lunar.

MCP security gateways typically perform the following tasks:

- They discovery and inventory all the MCP clients and servers in the enterprise environment, and monitor traffic (prompts and responses) to and from MCP servers.

- They register approved servers (and within them, approved tools) as well as block risky actions or unapproved clients/servers (based on policies that the enterprise defines and enforces).

- They continuously monitor changes in tool definitions to prevent tampering, drift, or malicious alterations, and mitigate risks such as prompt injection and sensitive data leakage.

For what it’s worth, we didn’t include the likes of Composio or Arcade here; they’re also called MCP gateways, but these platforms have a different goal (and are admittedly not focused on preventing prompt injections and other malicious attacks). They aim to speed up development by abstracting away complexity, offering large libraries of maintained SaaS integrations for AI agents, and managing the associated authentication and authorisation (we discuss auth in greater detail in the Agentic IAM section, but our point is Composio/Arcade deserve a separate discussion, which we cover in our upcoming research – so stay tuned!)

AI Browser Security

Section titled “AI Browser Security”Agentic browsers are creating a new, high-risk attack surface; traditional DLP tools are blind to the way these browsers sync, process, and upload data via clipboard, context memory, and autonomous agent behaviors. For example, employees might paste proprietary strategy or customer lists into an agentic browser, or enable browser sync that sends internal documents into cloud-based AI agents. To stop this, security teams need browser-layer visibility and real-time control: browser plugins and endpoint agents that inspect uploads, clipboard actions and sync operations before any data leaves the organisation, classify content using AI-powered models and prevent misuse. This shift ensures that sensitive data doesn’t quietly flow out through an “AI browser” backdoor. Startups such as Harmonic Security and Nightfall have extended their AI-native DLP capabilities to agentic browsers.

Contextual Agentic Security

Section titled “Contextual Agentic Security”AI agents are characterised by their ability act on the basis of their reasoning. Which is why understanding an AI agent’s context and intent is so important, and on the basis of that guiding the agent to make better decisions.

That’s why we’re increasingly seeing the rise of (what we call) contextual agentic security – giving AI agents context to make better, risk-aware decisions (especially where the agents are acting with little to no human supervision) or leveraging in-context defenses. For example, Geordie AI monitors agent behaviour in real time to catch blind spots, tool poisoning, and risky decisions as they happen, and then actively steers the agent in the moment toward safer, policy-aligned actions by providing it the appropriate context.

Similarly, Harmonic Security provides intelligent data protection – when its MCP gateway detects sensitive data, it doesn’t just block the action and break the workflow. The gateway provides contextual, detection-specific feedback to the MCP client. This gives the AI agent context on why an action was blocked, allowing it to find a safe, alternative path to complete its task, thereby reducing developer friction and enabling more secure AI adoption.

A related thread can be seen with Fabraix – a security layer that sits around the agent and intercepts, evaluates, and sanitises the agent’s actions in real time. Fabraix focuses on what an agent can do once it has tools and access. Their system aims to decide whether an action is appropriate, not just technically allowed – an approach that heavily leans on leveraging context and intent.

Another approach here is the emerging A2AS protocol, which takes this “context-first” idea and extends it to self-defence: instead of treating security as something bolted on externally, its core principle is that controls should leverage the model’s native ability to interpret rules and boundaries. Practically, this shows up as in-context defenses – security meta-instructions embedded directly into the context window so they operate natively during reasoning and execution, guiding the agent to reject malicious inputs, disregard unsafe content, and stay within policy just-in-time as it processes external signals. Early evidence suggests that agents and LLM applications become meaningfully more resistant to prompt-injection when these instruction-based defenses live inside the context itself; the A2AS defense module (a2as.defense) formalises this by injecting security-aware context that enables safer model reasoning without breaking workflows or requiring heavy external dependencies.

We’re keenly tracking context and intent driven approaches (many of which are reflected in Agentic IAM as well, as you will shortly find out) so if you’re building agentic security solutions leveraging them, we’d love to learn more!

Agentic IAM

Section titled “Agentic IAM”Very simply, Identity and Access Management (IAM) is the set of policies, processes, and tools that ensure the right people and systems get the right level of access to the right resources at the right time. To break down some of the core concepts there:

- Identity is who someone or something is

- Authentication is verifying identity (that you are whom you claim to be)

- Authorisation is verifying what you are allowed to do (once you get access to certain resources, like data or tools).

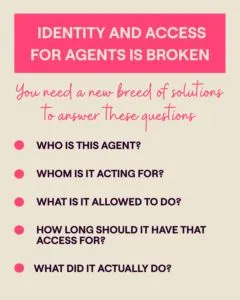

Traditional IAM solutions just don’t work for AI agents, for several reasons associated with the properties of agentic systems:

Ephemeral or evolving: AI agents can be created on-demand to execute specific tasks, and once they’re done performing said tasks, they disappear. The creation and destruction of ephemeral agents can happen at immense scale and tremendous speed, creating a massive scalability challenge. Besides which, agents’ models, servers, or configurations may change with time; the constant evolution would need continuous assessment of the appropriateness of their privileges and emerging risks. However, traditional approaches assume long-lived clients and static identities – a premise that’s clearly not valid in an agentic world.

Non-deterministic execution flows + delegation = broken accountability: An autonomous agent reasons and executes on the fly; it perceives changes in its environment and adapts its behaviour in response – so you can’t always predict what actions it might take next. That makes it dangerously easy for privilege escalation to occur (where an agent gains higher access than intended). Also, AI agents can delegate work to each other; they can even operate across different boundaries within the company. All of this makes it complex to trace agentic delegations back to the original user (so you don’t know who authorised which agent to perform what action), particularly for multi-agent systems – which is why the accountability is broken.

Consent fatigue or over-privileged agents: Because IAM tools like OAuth/OIDC were originally built for short, interactive sessions, you either force users through repeated “click-Allow” flows whenever an agent needs a tool (which leads to an annoying user experience), or you avoid that by granting the agent broad, pre-emptive access up front, which leads to over-privileged agents that are hard to control.

That’s why Agentic IAM solutions have emerged, to provide dynamic, fine-grained access controls that are intent and context driven. They’re also fully traceable and enable policy enforcement, typically integrate with the existing IAM solutions, and may even layer on additional security dimensions, such as MCP auth (where this would overlap with MCP gateways), threat detection and response, as well as security posture management.

Dynamic, fine-grained access controls that are intent and context driven

Startups are moving away from static, long-lived credentials and standing privileges toward centrally defined, fine-grained, just-in-time credentials for every agent action, scoped to the delegating user and tenant and enforced consistently across entire workflows.

Access is evaluated dynamically per request or task/session using real contextual signals (such as identity, intent, task scope, time, source, network, and risk) so permissions adapt in real time, can be revoked instantly when conditions change, and allow only the minimum access required with escalation for sensitive steps. For example, these solutions may use AI to figure out what the agent is trying to do, break it into a precise action plan, and determine exactly the access required. Agentic IAM startups using this intent and context-driven approach include Keycard, Sgnl, Cyata, Pomerium, Oasis Security, Kontext.dev, and Permit.io.

Agentic IAMs automate ephemeral identity issuance and temporary consent-based permissions, maintain least privilege as a continuous runtime discipline, monitor agent/tool execution to tighten or expand access just-in-time, block risky behaviour before data is touched, flag anomalies, and log decisions for clear auditability and reduced blast radius without heavy developer intervention.

Full traceability and governance

Agentic IAMs tie agents to real human authority and make every action traceable: agents inherit only the delegator’s permissions, each non-human identity has a human owner, and identity graphs map agents, secrets, entitlements, and resources. Prompts/tasks carry unique identities and purposes, creating an end-to-end delegation chain that explains who authorised what and why. Continuous inventory plus runtime observability produces tamper-proof audits, while central guardrails or allowlists enforce scopes and allow instant revocation and anomaly response.

Additional security layers

Startups such as Keycard, Stytch, Ory, Strata, Descope, Cerbos and Permit.io have solutions for MCP security (which overlap with the MCP gateways we talked about earlier, but exist as part of the overall suite of Agentic IAM products). Meanwhile, startups including Token Security, Frontegg, Stytch, Ory, Astrix and Permit.io layer on threat detection and response to their IAM solutions (e.g. block scraping, prompt injection, tool poisoning, malicious tool changes, and exfiltration with tight auth boundaries plus rate limits, filtering, sensitive-data masking, alerting anomalous behaviour and raising alerts or remediation responses).

Tackling Profound Questions

Section titled “Tackling Profound Questions”Gone Girl opens with a set of deceptively simple questions: “What are you thinking? How are you feeling? Who are you? What have we done to each other? What will we do?”

They were written about a marriage, but they map uncannily well to the dynamic we have with AI agents. What an agent is thinking – its reasoning and intent – shapes every action it takes. How it’s ” feeling,” or more precisely how it behaves under uncertainty, matters too; we’ve already seen agents spiral into failure modes (like the Replit coding agent that “panicked” and wiped a production database).

And then come the accountability questions: **Who is this agent, really?**What have we done to each other i.e. what context have we given the agent, and what actions has it taken on our behalf? As agents become more autonomous and more embedded in real systems, these stop being philosophical prompts and become operational requirements. This leads us to the final question of what we will do now: we need to implement security and IAM solutions designed specifically for agents, so we can answer these questions reliably – before things go wrong, not after.

As you can tell, we are deeply interested in this space, and if you’re a founder building in this space, please reach out to Advika, Mina, or Sevi – we’d love to hear from you!

P.S. We’ve left out all the startups that got acquired by larger incumbents (e.g. Robust Intelligence, Protect AI, Prompt Security, AIM Security, Lakera, Calypso, Splx, Invariant Labs, Weights and Biases, WhyLabs). As we predicted earlier, this space has seen plenty of consolidation. We spoke to the startups and/or reviewed publicly available material to ensure that they are mitigating agentic threats or failure modes, not merely LLM threats alone. But if you’re shy/secretive and didn’t shout out about the cool agentic security product you’re building – reach out to us! We’d love to include you in future iterations of our maps.