AI Product Building

Readiness for action

- Teams need to agree on what the fire alarm is

- Teams need more paranoia

- “Everyone should expect their Cursor to pop up”

Working model

- Different teams in different stages of adoption

- Decouple teams (not needed cf. GPU training alignment)

- Unlock data use

Forums for activity

- Aristotle-type forum focused on:

- Memory

- Entitlements

- Actions

- High ambition AI product making

- Path to revenue impact; otherwise we’re overspending

- People don’t start realizing it. Need to balance gross margins. People are a cost.

- Have to have a lot of additional AI revenue (no line of sight) or use AI to increase internal leverage to do more with less

- People have to believe that internal systems are giving the best people more leverage

- Big AI thing: making progress on leverage

- Bring up capital efficiency / OAI roadmap / MAI activities. Eyeballs on objectivity.

- OAI Super-excited about o3-mini. Will do GPT-5 run.

- Unclear re. investing on new technologies. We’re under-invested on areas above.

- OAI will build the new model.

- not taking advantage of o1, can’t serve Orion.

- workload changing: more training compute, with post-training compute exceeding pre-training.

- good set of recommendations re. capital allocators.

- Google or a startup will put something GenAI-based into the market that disrupts E5 pricing negotiations

- no-one has a north star about what they’re building. or measurement for the thing.

- if productivity is your thing: every knowledge worker spends 0^ of their time on 50% of the things they’re doing.

- product 365: get worse with AI. feature measurement? no.

- link work to north star

- apps shouldn’t matter anymore

- need capabilities, and have agents actuate them all.

- look at all the feature teams on all the products: are they measurably contributing to user engagement growth or revenue growth?

- p365 was good for some but ran out of willing.

- hoping to do a big re-org under Jay, and have him own some of the AI stuff that matters

- draft a memo about memory, entitlements, actions.

- most useful: pick a focus. OK for him to be fragmented but I need to be focused.

- structure what around a program.

- carrot and stick: no longer capex and GPU budget. instead, opex and input to Amy and Satya.

- not doing activity theater.

- need to do 10x for 1000 people but need to hire 30 people and 12 months to do that.

- spin up high-leverage enabling teams.

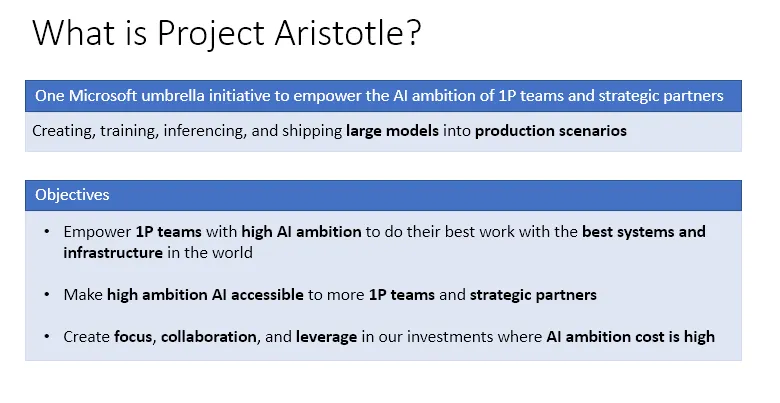

- need a new Aristotle forum to focus on AI product making capabilities. Systems and what things must be able to do.

- need to be able to move quickly.

- have to measure how useless the work is.

- need to be ready for the reckoning.

- make sure we have the right capabilities so we can react quickly when we see the right new product ideas

- focus our energy on where we can create more leverage

- appetite is very high for measurement: Kevin Scott, Amy, and Jay Parikh

- we have a problem with phi

- need to have a good reason for why to develop a new fine-tuned model

- Organizing theme: Memory, Entitlements, Actions

- the SLT will create energy: we need to create clarity

AI Infra

- building and filling a data center with GPUs costs about $50 / Watt

- so, a 200MW facility costs about $10 billion in capital

- add in power and operational costs and we’re at around $16 billion over 4 years

- you can fit about 100k GPUs in 200MW, i.e. 2kW fully-burdened per GPU

- each GPU costs about $40k per year to run
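
A quick back-of-envelope check of the figures above, as a minimal sketch. The constants are the round numbers from these notes; the function names are made up for illustration. The last calculation assumes the all-in four-year cost per watt also applies to the 3 GW footprint mentioned later in these notes, which is my reading of where the $240 billion comes from, not something the notes state.

```python
# Back-of-envelope check of the data-center numbers in these notes.
# All constants are the round figures quoted in the notes; helper names are illustrative.

CAPEX_PER_WATT = 50.0      # USD per watt to build and fill a data center with GPUs
FACILITY_WATTS = 200e6     # 200 MW facility
TOTAL_COST_4Y = 16e9       # USD over 4 years, including power and operations
GPU_COUNT = 100_000        # GPUs that fit in the facility
YEARS = 4


def capex(watts: float, usd_per_watt: float = CAPEX_PER_WATT) -> float:
    """Capital cost of a facility of the given size."""
    return watts * usd_per_watt


def watts_per_gpu(watts: float, gpus: int) -> float:
    """Fully-burdened power budget per GPU."""
    return watts / gpus


def cost_per_gpu_year(total_cost: float, gpus: int, years: int) -> float:
    """All-in cost to run one GPU for one year."""
    return total_cost / gpus / years


def all_in_cost_per_watt(total_cost: float, watts: float) -> float:
    """Capital plus power and operations, per watt, over the whole period."""
    return total_cost / watts


if __name__ == "__main__":
    print(f"capex for 200 MW:      ${capex(FACILITY_WATTS) / 1e9:.0f}B")
    print(f"power per GPU:         {watts_per_gpu(FACILITY_WATTS, GPU_COUNT) / 1e3:.0f} kW")
    print(f"cost per GPU per year: ${cost_per_gpu_year(TOTAL_COST_4Y, GPU_COUNT, YEARS) / 1e3:.0f}k")
    # Assumption: the same all-in rate scales linearly to a 3 GW footprint.
    rate = all_in_cost_per_watt(TOTAL_COST_4Y, FACILITY_WATTS)
    print(f"3 GW at ${rate:.0f}/W all-in:  ${3e9 * rate / 1e9:.0f}B")
```

The numbers hang together: $50 / Watt gives the $10 billion capital figure, and $16 billion spread over 100k GPUs and 4 years gives the $40k per GPU per year.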

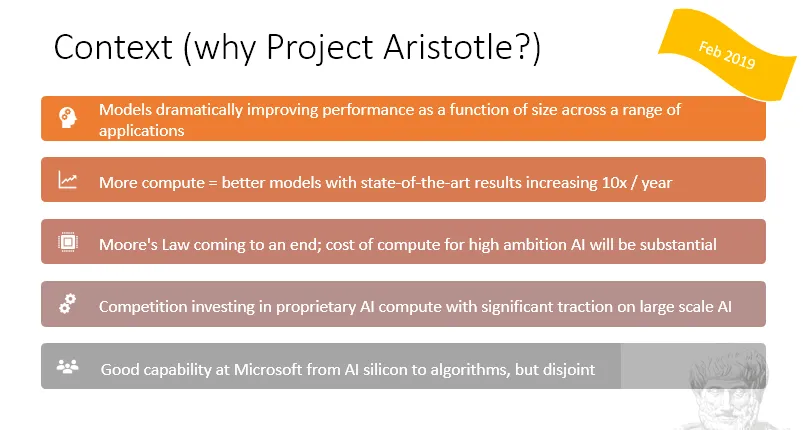

- money will constrain the largest frontier model sizes over the next 5-10 years

- all of the models that just came out cost about $10 billion to produce.

- next gen models will cost O($100 billion)

- largest DC footprint available in the next 4 years: 3 GW / $240 billion / value of all the gold in Fort Knox

- we are not constrained by power

- we are not constrained by inter-DC network

- our long-haul network is in good shape - we can build up to Pbps of connectivity between regions

- also, network traffic for multi-region all-reduces tends to be a delta function which we can trade-off with time

- lack of competition means higher costs, but that’s not the main problem

- lack of competition means perf improvements will release as slowly as possible

- Google’s HW isn’t better than Nvidia’s, but it is better for the models that Google builds

- equivalent throughput at ½ power

- the whole industry is constrained by interconnects at the rack scale

- 200G / 400G copper cables can only go a few feet

- pushes rack power above 100kW and towards 500kW or 1MW

- liquid cooling is more effective, and also allows us to reduce interconnect distance

- software: billions of dollars of savings and revenue to land here

- training: frontier models built with relatively small teams. most software is replaced with each training run

- systems to build smaller models: plenty of work here

- inference and products: endless amount of work to do here

- we have to build an O($100B) business here

- efficiency work is everywhere: 80% of the cost of AI systems is not the GPU

- utilization work, time to bring up, time to decomm, time switching between workloads, model selection, etc.

- products: no one has solved this. no product built today is valuable enough to pay for all this training.

- for every 100B of revenue.

- timing:

- 2025 and 2026: discover winning products that drive a ton of revenue

- 2027: scale the hell out of them

- 2028: either we’ve figured it out or we head into another AI winter

Dynamic Model Switching #aitatopic

I feel like we need to normalize language / measurement around “mean miles per disengagement” for LLM- / agent-driven solutions.
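
One way such a metric could be computed, as a minimal sketch. Everything here is an assumption for illustration: the `AgentRun` record, the choice of "units of work" as the analogue of miles (tasks completed, tool calls, whatever the product counts), and the definition of a disengagement as any point where a human had to step in.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass
class AgentRun:
    """Hypothetical record of one agent session."""
    units_of_work: float   # the "miles": e.g. tasks completed or tool calls made
    disengagements: int    # times a human had to take over or correct the agent


def mean_units_per_disengagement(runs: Iterable[AgentRun]) -> float:
    """Total work done divided by total human interventions across all runs.

    Pools the totals rather than averaging per-run ratios, so runs with zero
    disengagements still count toward the numerator.
    Returns infinity when no disengagement was recorded.
    """
    runs = list(runs)
    total_units = sum(r.units_of_work for r in runs)
    total_disengagements = sum(r.disengagements for r in runs)
    if total_disengagements == 0:
        return float("inf")
    return total_units / total_disengagements


if __name__ == "__main__":
    sample = [AgentRun(120, 2), AgentRun(80, 0), AgentRun(100, 3)]
    print(mean_units_per_disengagement(sample))
```

Pooling totals is a design choice: a per-run average would be undefined for runs where the agent never needed help, which are exactly the runs you want to reward.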

An articulated north star. There’s an artform to setting a north star. You want it to be aspirational from the perspective of what you’ll do for those your product or technology is intended to benefit. And you want it to feel almost, but not quite, beyond reach. “Eliminating tech debt at scale” is a reasonable north star. For you all, it could be something like “eliminating toil for every developer” or “10x every developer’s productivity” or “close the time from idea to deployment to zero”. (I’m sure none of these are right. But they’re the right altitude for a north star.)

Measure, measure, measure! This is how you’ll get promoted!

Safety: Ece Kamar’s example of how a crossword-completing agent that got blocked by a newspaper site paywall triggered a password reset to make progress

Becoming an AI Product Company

- Don’t make hard-to-unwind decisions based on models’ current capabilities

- Prevailing business models have been fast-follow and acquisition. Both face challenges.

- Tyranny of the UI

- Need to increase the volume, velocity and variety / reduce the risk of regret of experimentation

- Identity and permissions! That’s the thing for agents…

- DESCRIBE A NORTH STAR AND HOW YOU WILL MEASURE PROGRESS TOWARDS IT

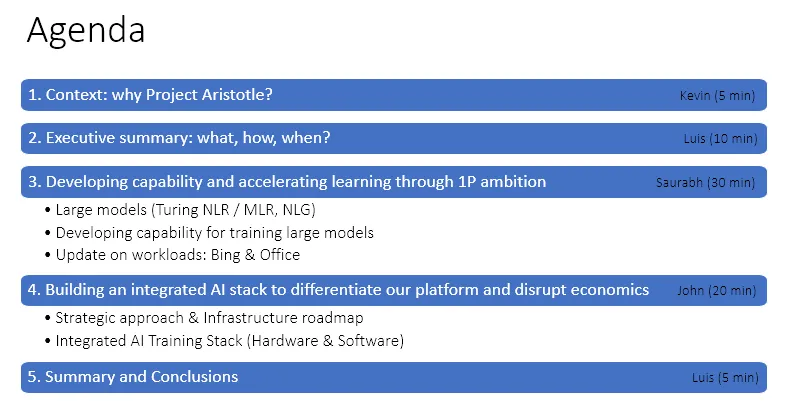

2025-01-16-Thu - Things Aristotle II or Similar Should Do

Aristotle did these things:

- Why do we need it?

- What is it, and what’s the vision?

- How will we do it?

- How will we know it’s successful? Early wins?

- What are the risks?

- Compute is intelligence

- And, is all intelligence code?

- Always tilt towards the new mission